I simply renamed two of the OpenImageDenoise nodes to 'Albedo-denoised' and 'Normal-denoised'.

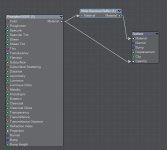

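In my node setup above there are three OpenImageDenoise nodes and two Get Bounced Buffer nodes. Only one of the OpenImageDenoise nodes has the Cleaned Aux box ticked: the one that denoises the beauty pass using the already-denoised albedo and normal buffers.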

For a complete setup, here are some workflow tips:

You need the latest version of the Intel denoiser (OpenImageDenoise) as well as Dpont's filters.

Next, place an extra material node in your materials to 'capture' the bounced rays into a buffer. That buffer is then read in the Node Image Filter by a 'Get Bounced Buffer' node, as shown above. You don't need to select the RAW and NORMAL outputs of the Render Buffer node, because the 'Get Bounced Buffer' nodes take care of those. Only the beauty (Color) pass is needed.

There are two ways to insert the material node into your materials:

- Manually --> just look up the 'Write Bounced Buffer' material node and insert it between the Surface node and your shader.

- Automatically --> Use Dpont's Python script to insert (or take away) the buffer automatically in ALL your materials. This is what I always use.

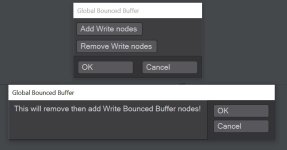

[Screenshot: attachment 151232]

Click 'Add Write nodes':

[Screenshot: attachment 151233]

Your final material setup should look like this:

[Screenshot: attachment 151234]

Make sure your shader is also connected to the OpenGL input; otherwise everything will turn grey in the OpenGL display.

If you don't have many surfaces, it may be wiser to add the buffer node manually, since the node adds some overhead to materials whose bounced rays you don't need to capture.
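To make the wiring concrete, here is a toy model of what 'Add Write nodes' does, including the selective option for when only some materials need capturing. This is NOT Dpont's actual script and does not use the host application's scripting API. It assumes a material can be represented as a simple chain of node names running from the Surface node down to the shader, and the `Material` class and `add_write_nodes`/`remove_write_nodes` functions are hypothetical names for illustration.

```python
from dataclasses import dataclass, field

WRITE_NODE = "Write Bounced Buffer"


@dataclass
class Material:
    """Hypothetical toy material: node names from the Surface node down to the shader."""
    name: str
    chain: list = field(default_factory=list)  # e.g. ["Surface", "Diffuse"]
    opengl_input: str = ""                      # node wired into the OpenGL input


def add_write_nodes(materials, only=None):
    """Insert the write node between Surface and the shader.

    If `only` is given, just those material names get the node, so
    materials whose bounced rays aren't needed avoid the overhead.
    """
    for mat in materials:
        if only is not None and mat.name not in only:
            continue  # skip materials we don't want to capture
        if WRITE_NODE in mat.chain:
            continue  # already inserted; never add it twice
        mat.chain.insert(1, WRITE_NODE)  # index 0 is the Surface node
        # Keep the shader itself on the OpenGL input so the viewport doesn't turn grey.
        mat.opengl_input = mat.chain[-1]


def remove_write_nodes(materials):
    """Take the write node back out of every material."""
    for mat in materials:
        mat.chain = [node for node in mat.chain if node != WRITE_NODE]


mats = [Material("Wall", ["Surface", "Diffuse"]), Material("Glass", ["Surface", "Glass"])]
add_write_nodes(mats, only={"Wall"})
print(mats[0].chain)  # ['Surface', 'Write Bounced Buffer', 'Diffuse']
print(mats[1].chain)  # ['Surface', 'Glass']
```

Calling `add_write_nodes(mats)` without `only` corresponds to the "ALL your materials" behaviour described above, while `remove_write_nodes` mirrors taking the buffer nodes away again.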

You can also turn off some bounced buffers in the Render Properties/Buffer section, but I never bother, as the speed gain is not that dramatic.

I have noticed that a very highly anti-aliased normal pass works best for getting a detailed denoised image. So for a final image, you could render the normal pass separately with all lights turned off and ray-traced shadows disabled. You can then crank anti-aliasing up to high, generate a smooth normal pass, and save it with the 'Get Global Buffer' node. Finally, denoise the results.
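One plausible reason heavy anti-aliasing helps: the denoiser uses the normal pass as a guide for where edges are, and a pixel sampled only once snaps to a single face, while more subsamples blend the normals across the edge. The sketch below is a toy illustration under stated assumptions, not the renderer's actual anti-aliasing. It assumes a hypothetical pixel straddling an edge at x = 0.5 between two faces, and evenly spaced subsamples along one axis; `face_normal` and `pixel_normal` are hypothetical helpers.

```python
import math


def face_normal(x):
    """Hypothetical scene: the pixel straddles an edge at x = 0.5 between two faces."""
    return (0.0, 0.0, 1.0) if x < 0.5 else (1.0, 0.0, 0.0)


def pixel_normal(samples):
    """Average `samples` evenly spaced subsamples across the pixel, then renormalize."""
    normals = [face_normal((i + 0.5) / samples) for i in range(samples)]
    sx, sy, sz = (sum(component) for component in zip(*normals))
    length = math.sqrt(sx * sx + sy * sy + sz * sz)
    return (sx / length, sy / length, sz / length)


print(pixel_normal(1))  # (1.0, 0.0, 0.0): a single sample snaps to one face
print(pixel_normal(4))  # about (0.707, 0.0, 0.707): the edge is blended
```

Renormalizing after averaging keeps the result a unit normal, which is what a normal pass is expected to contain.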